
Boosting Your Application's Performance and Scalability

Caching Patterns

Caching is a widely used technique for improving application performance and reducing the load on backend systems. By keeping frequently accessed data in a cache, applications avoid the overhead of repeatedly reading from slower storage such as databases or file systems.

In this article, we’ll discuss the main caching patterns that are commonly used in IT, and the benefits and drawbacks of each. We’ll also explore some of the main challenges of caching and how to overcome them.

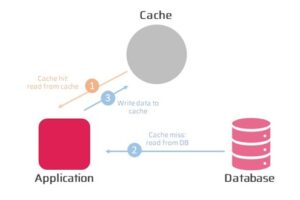

Cache-Aside Pattern

The Cache-Aside pattern is one of the most commonly used caching patterns. In this pattern, the application accesses the cache directly rather than going through a middleware layer. When the application needs data, it first checks the cache. If the data is not in the cache, the application fetches it from the backend system and then stores it in the cache for future use.

The Cache-Aside pattern is simple and easy to implement, and it provides a good balance between cache hit rates and data consistency. However, the application becomes responsible for sizing the cache, choosing an eviction policy, and invalidating or expiring entries so that the cache neither overflows nor serves stale data.
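To make the read path concrete, here is a minimal Python sketch of Cache-Aside. It is an illustration only: `CacheAsideReader`, the `database` dict, and the `backend_calls` counter are hypothetical names, and a plain dict stands in for a real cache such as Redis or Memcached.

```python
from typing import Callable, Dict, Optional


class CacheAsideReader:
    """The application owns both the cache lookup and the backend fetch."""

    def __init__(self, load_from_backend: Callable[[str], Optional[str]]) -> None:
        self._load_from_backend = load_from_backend  # hypothetical backend lookup
        self._cache: Dict[str, str] = {}             # stand-in for a real cache
        self.backend_calls = 0                       # only here to show the effect

    def get(self, key: str) -> Optional[str]:
        # 1. Check the cache first.
        if key in self._cache:
            return self._cache[key]
        # 2. On a miss, fetch from the backend system.
        self.backend_calls += 1
        value = self._load_from_backend(key)
        # 3. Store the result for future reads.
        if value is not None:
            self._cache[key] = value
        return value

    def invalidate(self, key: str) -> None:
        # The application must call this when the backend value changes.
        self._cache.pop(key, None)


database = {"user:1": "Ada"}      # pretend this is a slow database
reader = CacheAsideReader(database.get)
first = reader.get("user:1")      # miss: goes to the backend
second = reader.get("user:1")     # hit: served from the cache
```

Note that nothing updates the cached entry when `database` changes. Until the application calls `invalidate`, readers keep getting the old value, which is the stale-data risk described above.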

Read-Through Pattern

The Read-Through pattern is another commonly used caching pattern. In this pattern, the application accesses the cache indirectly through a middleware layer. When the application needs data, it sends a request to the middleware, which first checks the cache. If the data is not in the cache, the middleware fetches it from the backend system and stores it in the cache before returning it to the application.

The Read-Through pattern is useful when the application needs to access data that is expensive to fetch from the backend system, and when the middleware layer can add additional functionality like data transformation or caching logic. However, it can also add additional latency and complexity to the application architecture.
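The difference from Cache-Aside is mostly about who owns the loading logic. In the sketch below, which assumes a hypothetical `ReadThroughCache` class and a made-up `load_price` backend call, the application only ever talks to the cache layer, and the cache layer decides when to call the backend.

```python
from typing import Callable, Dict, Generic, TypeVar

K = TypeVar("K")
V = TypeVar("V")


class ReadThroughCache(Generic[K, V]):
    """Cache layer that loads missing entries itself through a configured loader."""

    def __init__(self, loader: Callable[[K], V]) -> None:
        self._loader = loader
        self._entries: Dict[K, V] = {}
        self.loads = 0  # counts backend fetches, for illustration only

    def get(self, key: K) -> V:
        if key not in self._entries:
            # Miss: the cache layer, not the application, fetches and stores the value.
            self.loads += 1
            self._entries[key] = self._loader(key)
        return self._entries[key]


def load_price(sku: str) -> float:
    """Hypothetical backend call that is expensive in a real system."""
    catalogue = {"sku-1": 9.99}
    return catalogue[sku]


price_cache = ReadThroughCache(load_price)
first_price = price_cache.get("sku-1")   # loaded through the cache layer
second_price = price_cache.get("sku-1")  # served from the cache
```

Because the loader is injected once, any transformation of the backend data can live in the loader instead of being repeated at every call site.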

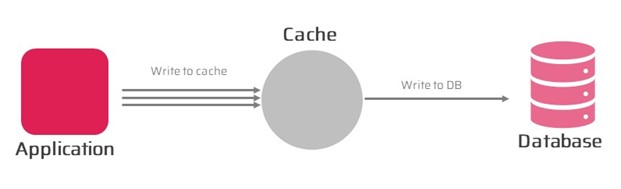

Write-Through Pattern

The Write-Through pattern keeps the cache in sync with the backend system. In this pattern, the application writes data to the cache middleware layer, which then synchronously writes it to the backend system before confirming the write. As long as all writes go through the cache, the data in the cache stays consistent with the data in the backend system.

The Write-Through pattern is useful when data consistency is critical, and when write operations are infrequent. However, it can also add additional latency to write operations and increase the load on the backend system.
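A minimal sketch of the write path could look like the following. `WriteThroughCache` and `orders_db` are hypothetical names, and a dict stands in for the backend system. Writing to the backend before updating the cache is a design choice made for this sketch, so that a failed backend write does not leave an unpersisted value in the cache.

```python
from typing import Dict, MutableMapping


class WriteThroughCache:
    """Every write goes to the backend synchronously, then to the cache."""

    def __init__(self, backend: MutableMapping[str, str]) -> None:
        self._backend = backend
        self._entries: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        # Assumption of this sketch: persist first. If the backend raises,
        # the cache is left untouched and the caller sees the error.
        self._backend[key] = value
        self._entries[key] = value

    def get(self, key: str) -> str:
        if key not in self._entries:
            self._entries[key] = self._backend[key]
        return self._entries[key]


orders_db: Dict[str, str] = {}            # stand-in for the backend system
orders = WriteThroughCache(orders_db)
orders.put("order:7", "paid")             # returns only after both writes are done
```

The caller pays for the backend write on every `put`, which is where the extra write latency comes from.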

Write-Behind Pattern

The Write-Behind pattern, also known as Write-Back, is an asynchronous counterpart of the Write-Through pattern. In this pattern, when the application writes data to the cache, the cache immediately returns a success response to the application without waiting for the data to be written to the backend system. The cache then asynchronously writes the data to the backend system.

The Write-Behind pattern can reduce the latency and improve the throughput of write operations. However, until the cache has flushed a write, the backend system still holds the older version of the data, and the write is lost if the cache fails before it is persisted.
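The sketch below shows the idea with a hypothetical `WriteBehindCache`. To keep the example deterministic, `flush` is called by hand. In a real system a background thread or timer would call it, which is an assumption of this sketch rather than something any particular product prescribes.

```python
from typing import Dict, MutableMapping, Optional


class WriteBehindCache:
    """Acknowledge writes immediately and persist them later in batches."""

    def __init__(self, backend: MutableMapping[str, str]) -> None:
        self._backend = backend
        self._entries: Dict[str, str] = {}
        self._dirty: Dict[str, str] = {}  # writes not yet persisted

    def put(self, key: str, value: str) -> None:
        # Returns without touching the backend.
        self._entries[key] = value
        self._dirty[key] = value  # repeated writes to one key coalesce

    def get(self, key: str) -> Optional[str]:
        if key in self._entries:
            return self._entries[key]
        return self._backend.get(key)

    def pending(self) -> int:
        return len(self._dirty)

    def flush(self) -> int:
        """Persist all pending writes; meant to run in the background."""
        batch, self._dirty = self._dirty, {}
        self._backend.update(batch)
        return len(batch)


audit_db: Dict[str, str] = {}                     # stand-in for the backend system
audit = WriteBehindCache(audit_db)
audit.put("event:1", "created")
audit.put("event:1", "updated")                   # overwrites the pending write
visible_before_flush = "event:1" in audit_db      # backend has not seen it yet
pending_before = audit.pending()
flushed = audit.flush()                           # one backend write for two puts
```

Anything still in `_dirty` when the process dies never reaches the backend, which is the data-loss risk of this pattern.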

Write-Around Pattern

The Write-Around pattern is a caching pattern that bypasses the cache for write operations. In this pattern, when the application writes data, it writes it directly to the backend system, bypassing the cache entirely.

The Write-Around pattern is useful when the application performs a large number of writes whose data is rarely read back, since those writes do not fill the cache with entries nobody needs. However, recently written data is never in the cache, so the first read after a write is a cache miss that goes to the backend system.
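Here is a small sketch of Write-Around combined with reads that populate the cache on demand. `WriteAroundStore` and `logs_db` are hypothetical names. Dropping any cached copy on write is an extra step added in this sketch so that an earlier read cannot leave a stale entry behind.

```python
from typing import Dict, MutableMapping


class WriteAroundStore:
    """Writes go straight to the backend; only reads populate the cache."""

    def __init__(self, backend: MutableMapping[str, str]) -> None:
        self._backend = backend
        self._cache: Dict[str, str] = {}
        self.backend_reads = 0  # for illustration only

    def write(self, key: str, value: str) -> None:
        self._backend[key] = value
        # Assumption of this sketch: discard any older cached copy.
        self._cache.pop(key, None)

    def read(self, key: str) -> str:
        if key in self._cache:
            return self._cache[key]
        self.backend_reads += 1
        value = self._backend[key]
        self._cache[key] = value
        return value

    def is_cached(self, key: str) -> bool:
        return key in self._cache


logs_db: Dict[str, str] = {}                     # stand-in for the backend system
store = WriteAroundStore(logs_db)
store.write("log:1", "started")
cached_after_write = store.is_cached("log:1")    # the write did not touch the cache
value = store.read("log:1")                      # first read is a miss
```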

Refresh-Ahead Pattern

The Refresh-Ahead pattern is a caching pattern that proactively refreshes data before it is requested by the application. In this pattern, the cache periodically refreshes data in the background to ensure that it is up-to-date and readily available when the application needs it.

The Refresh-Ahead pattern is useful when the application requires up-to-date data and when refreshing an entry in the background costs less than making a request wait on a cache miss. However, it can also increase the load on the backend system and consume additional network bandwidth.

To implement the Refresh-Ahead pattern, the cache must periodically refresh the data using a background job or a dedicated thread. The frequency of the refresh should be carefully tuned to balance the benefits of having up-to-date data with the costs of refreshing it.

In some cases, it may be necessary to implement additional logic to prevent excessive refreshing of data that is rarely accessed or that does not change frequently. For example, the cache may use a time-to-live (TTL) or a cache invalidation mechanism to limit the frequency of refreshing data.
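The two ideas above, a periodic background refresh and a guard against refreshing data nobody reads, can be sketched as follows. `RefreshAheadCache`, `refresh_due`, and the `refresh_after` parameter are hypothetical names. The clock is injectable so the example runs without sleeping, and a real deployment would call `refresh_due` from a background job or dedicated thread.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class _Entry:
    value: str
    loaded_at: float
    accessed_since_load: bool = False


class RefreshAheadCache:
    """Reloads entries in the background once they reach a configured age."""

    def __init__(self, loader: Callable[[str], str], refresh_after: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader
        self._refresh_after = refresh_after
        self._clock = clock
        self._entries: Dict[str, _Entry] = {}

    def get(self, key: str) -> str:
        entry = self._entries.get(key)
        if entry is None:
            entry = _Entry(self._loader(key), self._clock())
            self._entries[key] = entry
        entry.accessed_since_load = True
        return entry.value

    def refresh_due(self) -> int:
        """Refresh old entries that are still in use; return how many were reloaded."""
        now = self._clock()
        refreshed = 0
        for key, entry in list(self._entries.items()):
            if now - entry.loaded_at < self._refresh_after:
                continue  # still fresh enough
            if not entry.accessed_since_load:
                del self._entries[key]  # nobody read it, so stop refreshing it
                continue
            self._entries[key] = _Entry(self._loader(key), now)
            refreshed += 1
        return refreshed


rates_source = {"EUR/USD": "1.10"}     # stand-in for the backend system
fake_time = [0.0]                      # controllable clock for the example
rates = RefreshAheadCache(rates_source.__getitem__, refresh_after=30.0,
                          clock=lambda: fake_time[0])
rates.get("EUR/USD")                   # first read loads the value
rates_source["EUR/USD"] = "1.20"       # the backend value changes
fake_time[0] = 31.0
refreshed = rates.refresh_due()        # what the background job would do
latest = rates.get("EUR/USD")          # already up to date, no miss
```

Dropping entries that were not read since their last load is one possible policy. It keeps the refresh job from hitting the backend for data that has gone cold.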

Overall, the Refresh-Ahead pattern can help improve the performance of applications by reducing the latency of data access and ensuring that up-to-date data is readily available. However, it requires careful tuning and management to avoid overloading the backend system or consuming excessive network bandwidth.

| Pattern | Summary | Advantages | Disadvantages |
| --- | --- | --- | --- |
| Cache-Aside | Data is loaded into cache on demand when requested by the application. | Easy to implement, low cache miss rate. | High latency for first cache miss, stale data if not invalidated. |
| Read-Through | Data is automatically loaded into cache when it is requested by the application. | Low latency, automatic data population. | High network and backend load, stale data if not invalidated. |
| Write-Through | Data is written to the cache and synchronously to the backend system. | Data consistency, low cache miss rate. | High write latency, high backend load. |
| Write-Around | Data is written to the backend system but not to the cache. | Low cache pollution, low write latency. | High read latency for cold data, no cache performance improvement. |
| Write-Behind (Write-Back) | Data is written to the cache and asynchronously written to the backend system. | Low write latency, improved write performance. | Potential data loss in case of cache failure, high cache pollution. |
| Refresh-Ahead | Data is proactively refreshed in the cache before it is requested by the application. | Low data access latency, up-to-date data. | High backend load, potential network congestion. |

Each caching pattern has its own strengths and weaknesses. The choice of which pattern to use depends on the specific requirements and characteristics of the application. For example, if the application requires low-latency access to frequently read data, the Read-Through or Cache-Aside patterns may be a good choice. If data consistency and low cache miss rates are important, the Write-Through pattern may be preferred. On the other hand, if the application writes large volumes of data that are rarely read back, the Write-Around pattern may be a better fit.

In any case, it is important to carefully evaluate the trade-offs of each caching pattern and choose the one that best fits the specific needs of the application.

Challenges of Caching

While caching can provide significant performance benefits to applications, several challenges must be addressed for it to be effective.

Types of Data to Cache

Choosing which data to cache can be a difficult task, as not all data is equally important or beneficial to cache. Caching too much data can result in wasted resources and increased cache pollution, while caching too little data can result in high cache miss rates and reduced performance.

To address this challenge, it is important to carefully evaluate the data access patterns and characteristics of the application and choose the data that is most frequently accessed or has the highest impact on performance.
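One simple way to start this evaluation is to count how often each key is requested. The sketch below assumes you can export a list of requested keys from your logs. `hottest_keys` and the sample `access_log` are hypothetical, and real workloads usually need more than raw counts (for example, the cost of each backend fetch).

```python
from collections import Counter
from typing import Iterable, List, Tuple


def hottest_keys(access_log: Iterable[str], top_n: int) -> List[Tuple[str, float]]:
    """Return the most requested keys with their share of all requests."""
    counts = Counter(access_log)
    total = sum(counts.values())
    # most_common() yields nothing for an empty log, so total is never a zero divisor.
    return [(key, hits / total) for key, hits in counts.most_common(top_n)]


access_log = ["home", "cart", "home", "profile", "home", "cart"]
candidates = hottest_keys(access_log, top_n=2)
```

Keys that account for a large share of requests are the first candidates for caching, while the long tail may not be worth the memory.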

Data Coherence

Another challenge of caching is maintaining data coherence, or ensuring that the data in the cache is consistent with the data in the backend system. Data coherence is particularly important in applications that require data consistency or that deal with frequently updated data.

To address this challenge, caching mechanisms must be implemented to ensure that the data in the cache is regularly updated or invalidated when changes occur in the backend system. This can include using techniques such as time-to-live (TTL) values, cache invalidation, or using distributed caching systems that can synchronize data across multiple cache instances.
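As an illustration of two of these techniques, the sketch below combines a time-to-live with explicit invalidation. `TTLCache` is a hypothetical class written for this article, not the API of any specific caching product, and the clock is injectable so the example does not have to wait for real time to pass.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Entries expire after ttl_seconds or when explicitly invalidated."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # key -> (value, expires_at)

    def set(self, key: str, value: str) -> None:
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key: str) -> Optional[str]:
        item = self._entries.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self._clock() >= expires_at:
            # Expired: drop it so the caller reloads from the backend system.
            del self._entries[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Call this when the backend value is known to have changed.
        self._entries.pop(key, None)


fake_now = [0.0]                                   # controllable clock for the example
sessions = TTLCache(ttl_seconds=60.0, clock=lambda: fake_now[0])
sessions.set("s1", "alice")
```

The TTL bounds how long a stale value can be served when nobody sends an invalidation, while `invalidate` removes a value immediately when the change is known.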

By addressing these challenges and implementing caching mechanisms that are tailored to the specific needs of the application, it is possible to achieve significant performance improvements and scalability benefits.

Let’s Talk Caching!

Caching may not be as exciting as flying cars or teleportation, but it’s still an essential component of modern software systems. If you’re looking to optimize your application’s performance and scalability, caching is a great place to start.

At AdvanceWorks, we have a team of caching wizards who can help you implement caching patterns that are tailored to the specific needs of your application. From Cache-Aside to Refresh-Ahead, we’ve got you covered.

So, if you’re ready to take your application’s performance to the next level, give us a shout! We promise not to speak in binary or use too many acronyms.

Tiago Jordão, Solutions Architect at AdvanceWorks. I’m a software architect, but that’s just my day job. By night, I’m a quality control vigilante, making sure every line of code is up to my exacting standards. I’m also a bit of a perfectionist, but don’t worry, I’m working on it.